Deep research is rarely a one-and-done task. It’s a multi-stage process: outlining the plan, discovering insights, evaluating sources, refining follow-ups, synthesizing analysis, and crafting deliverables. On GPU-based systems, completing one stage of these cycles can take 10 minutes—or longer if you loop back with fresh questions. That friction stifles iteration.

Today, NinjaTech AI is proud to announce SuperNinja Fast Deep Research, powered by our strategic partnership with Cerebras — delivering more than 5x faster research loops than traditional models. Now, doing iterative, multi-step explorations in minutes—not tens of minutes—is not only possible, it’s effortless.

Why This Matters Now

SuperNinja Fast Deep Research isn’t just fast. It’s transformation in motion:

- Speed without compromise: Complex sequences of reasoning, source evaluation, and synthesis that once took 10+ minutes now complete in just 1–2 minutes.

- Interactive intelligence: Real-time iteration shifts AI deep research from a static report to a dynamic, on-demand partner.

- No sacrifice on quality: Every answer is grounded in cited sources, structured reasoning, and thorough analysis—ensuring the speed boost doesn’t come at the expense of depth or accuracy.

Benchmarks: Comparable Accuracy, Breakthrough Speed

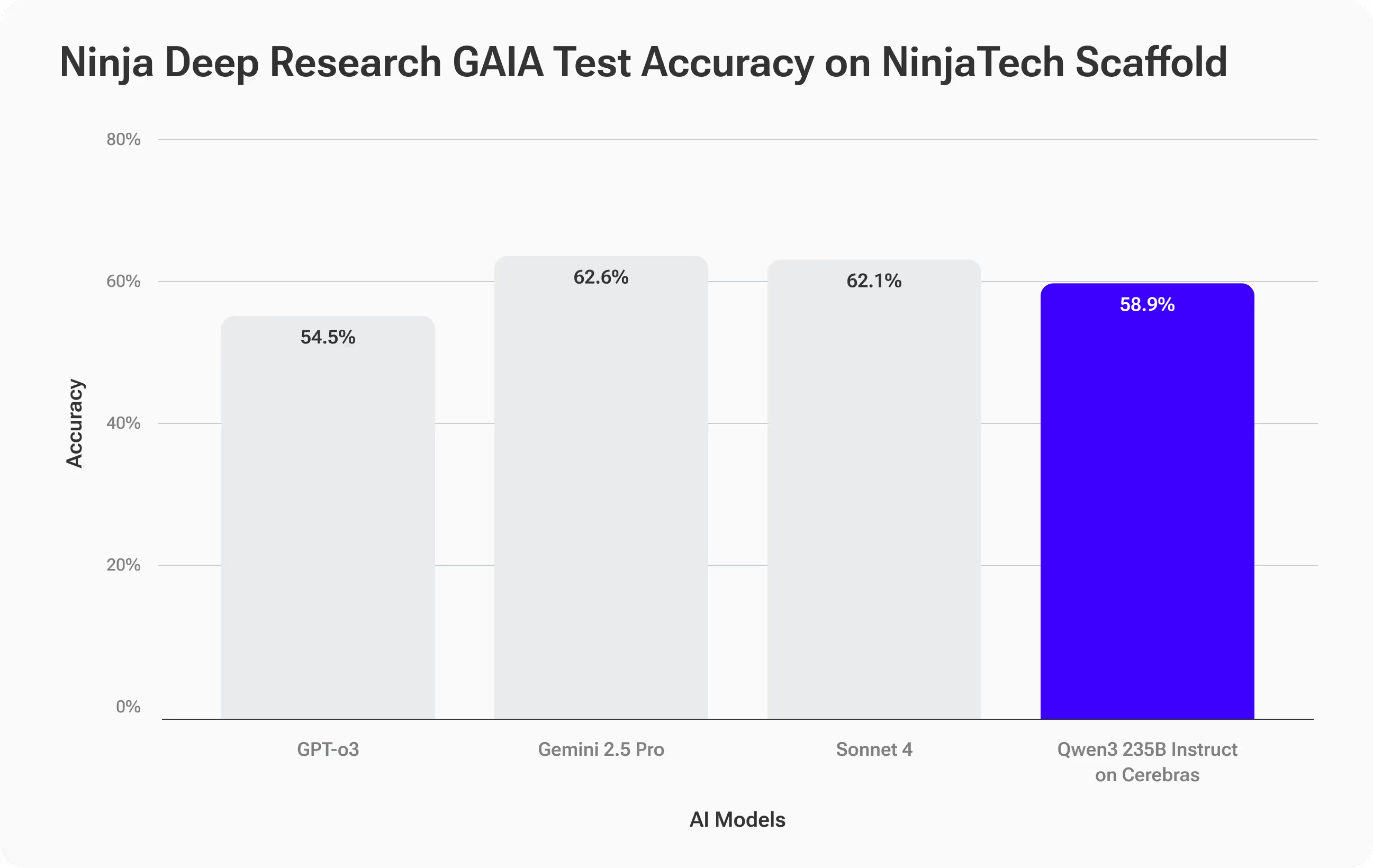

To evaluate both quality and performance, we tested SuperNinja Fast Deep Research, powered by the Qwen3-235B model on Cerebras hardware, using the GAIA benchmark—a challenging, real-world test of multi-step reasoning and tool use (arXiv link).

GAIA tasks are deliberately hard: 466 questions with verifiable answers, designed to measure not just factual recall but the ability to plan, gather sources, reason through complexity, and deliver accurate results.

Accuracy Results — Matching the Best

SuperNinja Fast Deep Research achieved 58.9% accuracy, closely tracking top-tier models like Anthropic’s Sonnet 4 (62.1%) and Gemini 2.5 Pro (62.6%), and outperforming OpenAI’s GPT-O3 (54.5%).

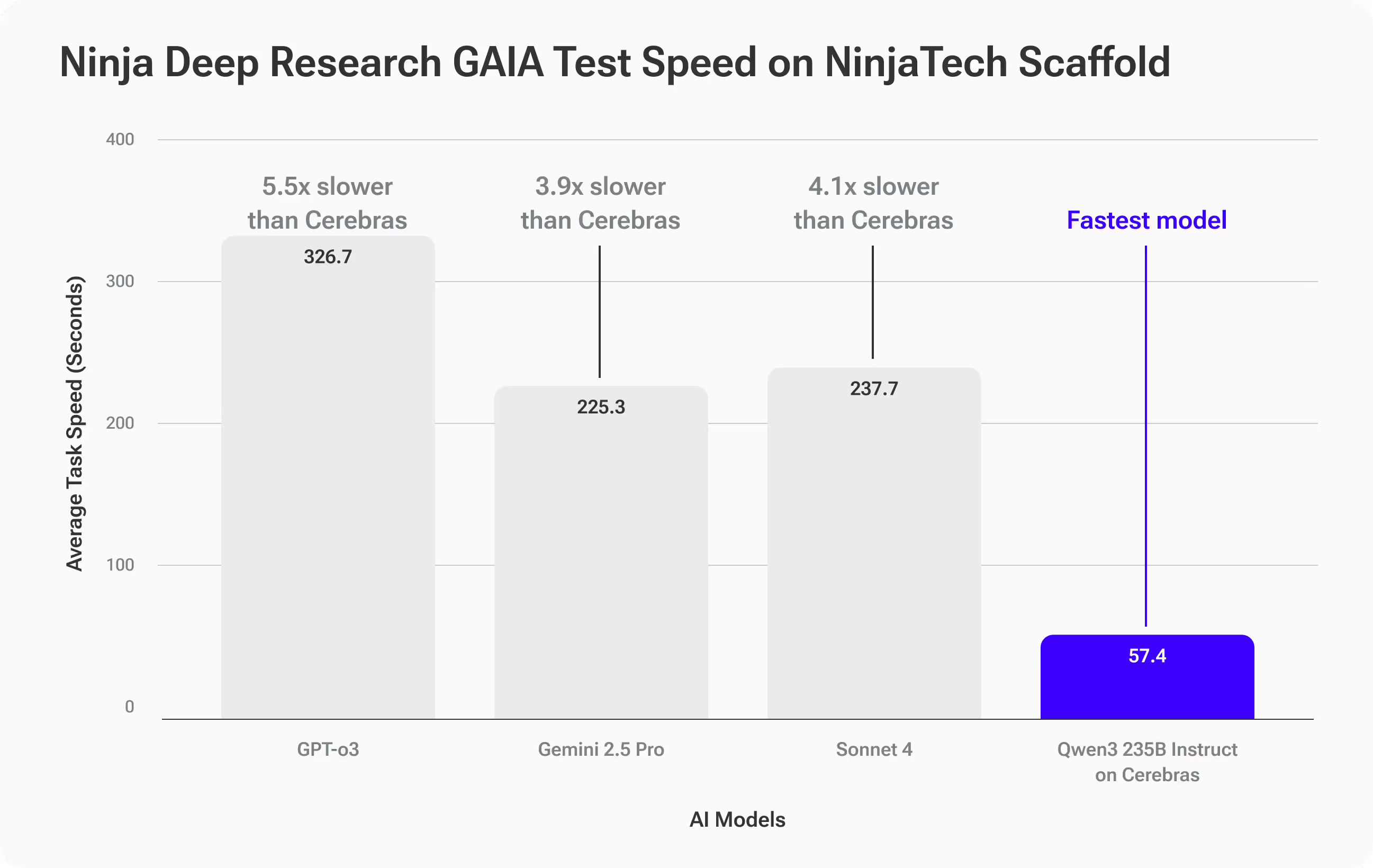

Speed Results — In a Class of Its Own

Where Qwen3-235B on Cerebras truly separated itself was speed:

- Average completion time: 57.4 seconds per GAIA task

- 3.9x faster than Gemini 2.5 Pro (225.3s)

- 4.1x faster than Sonnet 4 (237.7s)

- 5.5x faster than GPT-O3 (316.7s)

What This Means for You

You get accuracy on par with leading frontier models—but with dramatically faster answers. For deep research workflows that involve iteration, this speed unlocks entirely new possibilities: follow-ups, pivots, and deeper dives that would have been too slow before.

Real-World Example: When Speed Determines Strategy

Imagine you’re a brand strategist advising a global consumer goods company. The CMO wants to kick off discussions for a high-stakes corporate rebrand at next week’s leadership summit. You need to walk into that room with data-driven insights—not just opinions.

You start with this deep research prompt:

Research the 10 most notable corporate rebrands from 2010–2025 across multiple industries, using clear criteria to define “notable,” and gather credible, cited and linked sources on measurable changes in sales, market share, customer sentiment, and brand equity. Normalize results for comparability and present them ranked from most to least successful in a table, including all key metrics, brief case summaries for each brand, and at least five actionable, evidence-backed insights I can use to guide a rebrand strategy discussion.

With other AI research tools, you may wait 10-15 minutes for the initial report. If you have follow up questions that require further research, each iteration adds more time.

With SuperNinja Fast Deep Research, each cycle completes in 1–2 minutes, so you can iterate rapidly—refining your criteria, probing edge cases, adding fresh data, and stress-testing your insights in real time. Instead of producing one static report, you build an interactive table with linked sources, comparable metrics, and crisp, evidence-backed takeaways—ready to present and adapt live in the room.

The speed doesn’t just make you faster—it lets you explore more angles, validate more assumptions, and surface strategic insights you wouldn’t have had time to uncover otherwise.

How It Works

Despite major advances in open-source large language models, a persistent performance gap remains between these models and top proprietary systems on complex, long-horizon information retrieval tasks—challenges well-captured by benchmarks like GAIA. Closing that gap requires not just bigger models, but smarter inference.

SuperNinja’s Deep Research system uses a Plan & CodeACT scaffolding framework that turns a base open-source model—such as Qwen3-Instruct 235B—into a goal-driven researcher. This framework executes iterative validation, verification, and replanning loops, generating roughly twice the number of reasoning tokens compared to standard inference. That extra thinking time pays off in accuracy, systematically reducing characteristic model errors and improving performance to match state-of-the-art proprietary models.

We further boost precision through a data-driven optimization layer that customizes tool definitions, parameters, and retrieval strategies based on a customer’s domain and data. These tailored settings make the model more reliable for specialized, high-stakes research tasks.The trade-off? This richer, more rigorous reasoning process adds computational overhead on conventional GPU systems—slowing each iteration due to longer token generation and inter-GPU communication.

That’s where Cerebras’ wafer-scale inference helped us unlock a huge performance gain. By running the entire model entirely in on-chip SRAM, making inference super fast with no inter-GPU bottlenecks, we accelerate the full scaffolding loop by 4–6x compared to traditional systems. This means you get the accuracy of top proprietary models—without sacrificing speed—making iterative, multi-round research cycles practical in real time.

Try SuperNinja’s Fast Deep Research today

SuperNinja Fast Deep Research isn't just a faster tool—it’s a redefinition of how deep research workflows happen. If you value quick, rigorous insight that adapts to your questions in real time, you’ll feel the difference instantly.

Experience what happens when agentic AI meets next-gen speed.