The AI API for Every Model.

One Integration, 40+ Models.

Access the world's leading AI models through a single, fast, and affordable API. Build with GPT, Claude, Gemini, Llama, and more — no need to manage multiple providers.

Unparalleled Speeds

Experience the fastest proprietary and flagship AI models on the market, powered by next-gen chips.

Most Affordable

Achieve high-quality performance at a fraction of the cost of other LLM APIs.

Proven Quality

Ninja’s models are rigorously tested against leading AI benchmarks, demonstrating near state-of-the-art performance across diverse domains.

API PRICING

Ninja API Pricing

Access the world's best AI models through a single API at unbeatable prices. Build with Ninja's proprietary models and 40+ leading external models — from text generation and coding to image creation and research.

| Mode | Input price / M tokens | Output price / M tokens | Price / task |
| --- | --- | --- | --- |
| Qwen 3 Coder 480B (Cerebras) | – | – | $1.50 |
| Standard mode | – | – | $1.00 |
| Complex mode | – | – | $1.50 |
| Fast mode | – | – | $1.50 |

| Mode | Input price / M tokens | Output price / M tokens | Price / task |
| --- | --- | --- | --- |
| Qwen 3 Coder 480B (Cerebras) | $3.75 | $3.75 | – |
| Standard mode | $1.50 | $1.50 | – |
| Complex mode | $4.50 | $22.50 | – |
| Fast mode | $3.75 | $3.75 | – |

| Model | Input price / M tokens | Output price / M tokens |
| --- | --- | --- |
| Turbo 1.0 | $0.11 | $0.42 |
| Apex 1.0 | $0.88 | $7.00 |
| Reasoning 2.0 | $0.38 | $1.53 |
| Deep Research 2.0 | $1.40 | $5.60 |
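To make the per-token prices concrete, here is a minimal Python sketch that estimates the cost of one request from the model prices listed above. The function name `estimate_cost` and the example token counts are illustrative assumptions, not part of the Ninja API. The sketch also assumes cost scales linearly with token count, with no minimums, rounding rules, or volume discounts.

```python
from decimal import Decimal

# USD per 1M tokens as (input, output), copied from the model pricing above.
PRICES_PER_M_TOKENS = {
    "Turbo 1.0": (Decimal("0.11"), Decimal("0.42")),
    "Apex 1.0": (Decimal("0.88"), Decimal("7.00")),
    "Reasoning 2.0": (Decimal("0.38"), Decimal("1.53")),
    "Deep Research 2.0": (Decimal("1.40"), Decimal("5.60")),
}

TOKENS_PER_M = Decimal(1_000_000)


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Hypothetical helper: linear cost estimate in USD for one request."""
    input_price, output_price = PRICES_PER_M_TOKENS[model]
    return (input_price * input_tokens + output_price * output_tokens) / TOKENS_PER_M


if __name__ == "__main__":
    # Example workload (assumed): 200k input tokens and 50k output tokens.
    for model in PRICES_PER_M_TOKENS:
        cost = estimate_cost(model, input_tokens=200_000, output_tokens=50_000)
        print(f"{model}: ${cost:.4f}")
```

Under these assumptions, the example workload costs about $0.043 on Turbo 1.0 and $0.526 on Apex 1.0. Output tokens account for most of the Apex 1.0 total because its output price is roughly eight times its input price.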

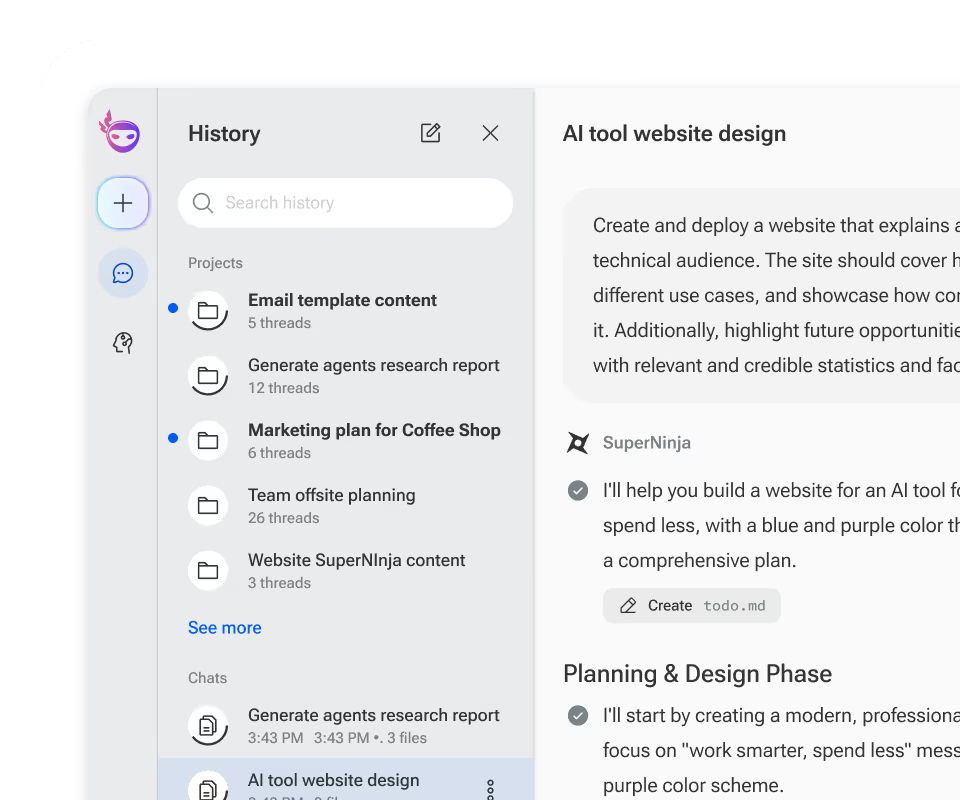

Describe the task. Ninja turns it into an app that runs step by step for you. No credit card required.